SPR-specific Hints

1. Using GPT4All in the Network

If you run the GPT4All server on another computer in your network, you can change the URL that the SPR uses with this command:

' Use your own IP address in the URL

$$URL=http://127.0.0.1:4891/v1/completions

AIL.Change GPT4All Url|$$URL
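As an illustration outside of SPR, the following Python sketch shows how the default URL can be rewritten for a server elsewhere in the network. The helper name `with_host` and the address 192.168.1.50 are made up for this example; only the port and the path come from the URL above.

```python
from urllib.parse import urlsplit, urlunsplit

# Default URL taken from the SPR example above.
DEFAULT_URL = "http://127.0.0.1:4891/v1/completions"


def with_host(url: str, host: str) -> str:
    """Return `url` with its host replaced, keeping scheme, port and path."""
    parts = urlsplit(url)
    netloc = host if parts.port is None else f"{host}:{parts.port}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


# 192.168.1.50 is a placeholder; use the address of your own server.
print(with_host(DEFAULT_URL, "192.168.1.50"))
```

The resulting string is what would be assigned to $$URL before calling the Change GPT4All Url command.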

2. Changing the used Model

To change the model that is used, use the Set Model command. Here are three examples:

AIL.Set Model|Replit

AIL.Set Model|Wizard Uncensored

AIL.Set Model|Hermes

As the parameter after the Set Model command, use the name that is displayed in green in the Download section.

This is the same name that appears in the GPT4All GUI menu.

Exceptions and how to use them:

For some models, automatic switching did not work at the time of writing (tested 01.07.2023).

This may work in later versions of GPT4All. The affected models are:

"Vicuna (large)" - ggml-vicuna-13b-1.1-q4_2.bin

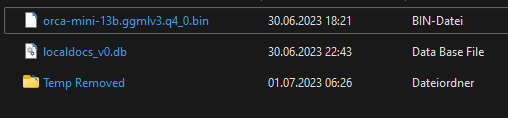

"Orca (Large)" - orca-mini-13b.ggmlv3.q4_0.bin

These models are used only if no other models are available.

If you want to use exactly one of these models, you can:

1. Close the GPT4All GUI.

2. Create a folder inside the Models folder.

3. Move all other models into that folder so that only the wanted model is left.

4. Restart GPT4All. Because no other model is available, this model is used, no matter which model you specify.

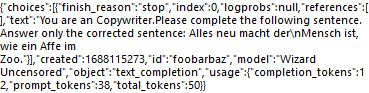

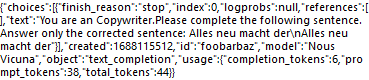
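The manual steps above can also be sketched as a small Python helper. This is only an illustration under assumptions: the function name `isolate_model` and the subfolder name `parked` are invented here, the model files are assumed to end in `.bin` (as the two file names listed above do), and the location of your Models folder has to be supplied by you. Close the GPT4All GUI before running anything like this.

```python
from pathlib import Path
import shutil


def isolate_model(models_dir: Path, keep: str, park_name: str = "parked") -> list[str]:
    """Move every *.bin file except `keep` into models_dir/park_name.

    Returns the names of the files that were moved.
    """
    if not (models_dir / keep).is_file():
        # Refuse to move anything if the wanted model is not there.
        raise FileNotFoundError(f"{keep} not found in {models_dir}")
    park = models_dir / park_name
    park.mkdir(exist_ok=True)
    moved = []
    for model_file in sorted(models_dir.glob("*.bin")):
        if model_file.name != keep:
            shutil.move(str(model_file), str(park / model_file.name))
            moved.append(model_file.name)
    return moved


# Example call (the path is a placeholder for your own Models folder):
# isolate_model(Path(r"C:\path\to\models"), "orca-mini-13b.ggmlv3.q4_0.bin")
```

To undo the change, move the files from the subfolder back into the Models folder.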

3. Check the RAW Output

If you look at the raw output using "AIC.Get Raw Output", you can see which model was actually used, together with some other information.
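If you save the raw output and want to inspect it outside of SPR, a sketch like the following may help. It assumes that the raw output is JSON shaped like an OpenAI-style completions response with a `model` field and a `usage` block; this is an assumption, not something stated above. The sample text and the function name `summarize_raw` are made up for illustration.

```python
import json

# Made-up sample for illustration only, shaped like an OpenAI-style
# completions response. Real raw output may contain other fields.
SAMPLE_RAW = """{
  "model": "example-model",
  "choices": [{"text": "Hello", "index": 0, "finish_reason": "stop"}],
  "usage": {"prompt_tokens": 5, "completion_tokens": 1, "total_tokens": 6}
}"""


def summarize_raw(raw: str) -> dict:
    """Extract the model name and total token count, if present."""
    data = json.loads(raw)
    return {
        "model": data.get("model"),
        "total_tokens": data.get("usage", {}).get("total_tokens"),
    }


print(summarize_raw(SAMPLE_RAW))
```

Missing fields come back as None instead of raising an error, so the helper can be used on responses that differ from the assumed shape.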

4. Train the AI with your own Data

This is a specialized topic that currently has no dedicated SPR commands.

For details, see: https://atlas.nomic.ai/

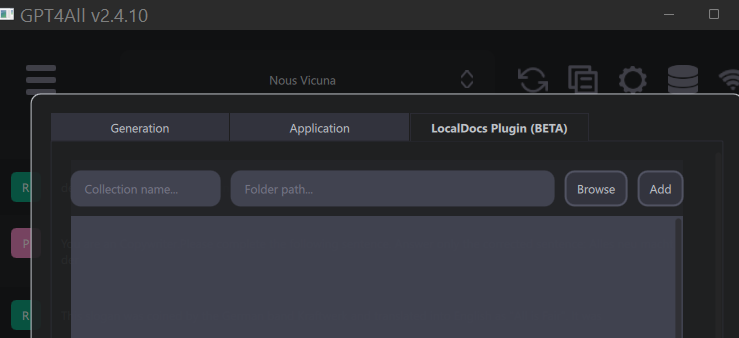

5. Use the "Local Docs" Feature

LocalDocs is a GPT4All plugin that allows you to chat with your local files and data.

It allows you to utilize powerful local LLMs to chat with private data without any data leaving your computer or server.

When using LocalDocs, your LLM will cite the sources that most likely contributed to a given output.

GPT4All allows you to search local documents such as PDFs.

To do this, add the folder that contains these documents to the system, together with a "Collection Name".

The folder will then be referenced automatically.

In your Models-Folder you will find a Database that references the Files in your "Local Docs".

This way the Model can access them.


Enabling LocalDocs

1. Download and install the most recent version of GPT4All Chat from the GPT4All Website.

2. Navigate to Settings and select the LocalDocs tab.

3. Set up a folder on your computer to serve as a collection containing files that your LLM should access. Feel free to modify the contents of this folder as needed. Your LLM will dynamically adapt to access the newly added files.

4. Initiate a chat session with any LLM (this includes external models like ChatGPT, but be cautious as data may be transmitted outside your device).

5. Click on the database icon located in the top-right corner and choose the collection you want your LLM to refer to during the chat session.

LocalDocs Capabilities

LocalDocs grants your LLM the ability to be informed about the contents of your documentation collection. However, not all prompts or questions will make use of your document collection for context. If LocalDocs is utilized in your LLM’s response, you will notice references to the document snippets that were accessed by LocalDocs.

LocalDocs can:

- Search through your documents based on your prompt or question. If your documents contain information that could be relevant in answering your query, LocalDocs will attempt to make use of snippets from your documents to provide context.

LocalDocs cannot:

- Respond to broad metadata queries such as “What documents are you aware of?” or “Provide information about my documents.”

- Summarize an entire document (e.g., "Summarize my Magna Carta PDF").

For solutions to common problems, please consult the Troubleshooting section.

6. Sample Script:

GPT4All will automatically load the required Model and switch to the Model that was asked for.

You can tell that the model is being loaded when GPT4All is busy but the CPU usage in the Task Manager is still low.

Once the model starts working, you will see the CPU usage go up (how far depends on the core setting in Settings).

If the wanted model is already loaded, the CPU usage will go up immediately.

Here is a Sample Script that will change the Model by name.

$$LOG=?exeloc\Output.txt

DEL.$$LOG

$$WOA="ISP.NSP.WSP.GSP.SSP."

$$WOB="GTO.JMP.JNJ.JNF.GSB.JSR.JIV.JIZ.JNZ.PRR.JLE.JME.JRR.JIT.JNT.JIS.JIE.VBS.JNS.JRS.JNR.PWS.JNP.JFP.JTP.JTN.JIO.JNO.JAC.JNC."

FOR.$$NUM|1|8

AIC.Set MaxToken|1024

GSB.Lab_SetModel

AIC.SetModel|$$MOD

AIC.Set Number|2

AIC.Set Temperatur|1

GSB.Write_Log|Model: $$MOD

$$TXT=Act as my Assistant.

$$TXT=$$TXT Below is a Line of words, each word separated with a Dot.

$$TXT=$$TXT Count and Sort the words alphabetically always comparing the first and the second letter of each word.

$$TXT=$$TXT Tell me how many words are in that Line.

$$TXT=$$TXT Do not generate code. Do not repeat yourself. Use english language.

$$TXT=$$TXT Show me the words in sorted order, in one Line separated by dots.$crlf$

$$TXT=$$TXT $$WOA

AIC.Ask GPT4All|$$TXT|$$RET

GSB.Write_Log|$$RET

AIC.Get Several|5|$$RAW

GSB.Write_Log|Used Model: $$RAW

DBP.---------------------

NEX.

ENR.

'-----------------------------------------------------------

:Lab_SetModel

SCS.$$NUM

CAN.1

$$MOD=Wizard Uncensored

CAN.2

$$MOD=Hermes

CAN.3

$$MOD=Snoozy

CAN.4

$$MOD=Replit

CAN.5

$$MOD=Nous Vicuna

CAN.6

$$MOD=Groovy

CAN.7

$$MOD=ChatGPT-3.5 Turbo

CAN.8

$$MOD=ChatGPT-4

CAE.

$$MOD=$$MOD

ESC.

RET.

'-----------------------------------------------------------

:Write_Log

VAV.$$OUT=§§_01$crlf$

ATF.$$LOG|$$OUT

DBP.$$OUT

RET.

'-----------------------------------------------------------

ENR.

'===========================================================

Another Sample Script that will change the Model by number.

Note that the API key for the OpenAI API is saved in the script folder using the Save_Key command.

$$LOG=?exeloc\Output.txt

DEL.$$LOG

$$WOA="STW.SCW.SAO.NAV.WTW.WCW.CAW.WFM.MAW.WPR.WPT.SIR.WFV.GCT.AVF.TVI.TVF.UNI.SMH.AGR.AGF.AFT.AFF.AMS.LBE.GSW.GRW.GTE.LBO.RBO.ANA.CAP.WLC.WRA.WRC.RRA.WIK.LAP.LVI.SWP.SWS.CLW.DBC.SFW.WFP.LFP.WTP.LTP.RIC.RCC.RAT."

GSC.$$WOA|.|$$ANZ

GSB.Write_Log|SPR counted: $$ANZ Elements.$crlf$------------------------------$crlf$

FOR.$$NUM|1|9

AIL.Set MaxToken|2048

AIL.SetModel|$$NUM

AIL.Set Number|1

AIL.Set Temperatur|1

AIL.srp|1

AIL.snb|1

GSB.Write_Log|Model: $$NUM

$$TXT=Please analyze the given string of three-letter words, each ending with a dot: $crlf$

$$TXT+$$WOA

$$TXT+$crlf$Count the number of words in the string and provide the result.$crlf$Sort the words in alphabetical order and display the final result.

DBP.$$TXT

AIL.Ask GPT4All|$$TXT|$$RET

GSB.Write_Log|$$RET

AIC.Get Several|5|$$RAW

GSB.Write_Log|Used Model: $$RAW

DBP.---------------------

NEX.

ENR.

'-----------------------------------------------------------

:Write_Log

VAV.$$OUT=§§_01$crlf$

ATF.$$LOG|$$OUT

DBP.$$OUT

RET.

'-----------------------------------------------------------

ENR.

'===========================================================
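To judge the answers that the models write to the log, it helps to have a reference result. The following Python sketch computes the word count and the alphabetical order for a dot-separated line such as $$WOA from the first sample script. The function name `count_and_sort` is made up for this example, and the sketch ignores the empty entry after the trailing dot, which may differ from how a given SPR command counts.

```python
def count_and_sort(line: str, sep: str = ".") -> tuple[int, str]:
    """Count the words in a separator-terminated line and sort them."""
    # Drop empty entries, e.g. the one after the trailing dot.
    words = [w for w in line.split(sep) if w]
    ordered = sep.join(sorted(words)) + sep if words else ""
    return len(words), ordered


# Word line from the first sample script above.
count, ordered = count_and_sort("ISP.NSP.WSP.GSP.SSP.")
print(count, ordered)  # 5 GSP.ISP.NSP.SSP.WSP.
```

Comparing each model's answer against this result shows quickly which models can handle the task.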

Conclusion

While GPT4All models may not match the prowess of OpenAI’s offerings, they come with the distinct advantage of being local, thus incurring no additional costs.

They are still quite capable and suitable for handling smaller tasks.

As with any AI system, it is advisable to use a computer with a high number of CPU cores and substantial VRAM on the graphics card for best performance.