We will use a sample script to analyze how well different language models handle sorting and counting tasks.

It's important to recognize that large language models (LLMs) like GPT-3 are inherently built on a statistical foundation that predicts the likelihood of the next word based on the preceding text.

Consequently, tasks such as sorting extensive datasets or counting a large number of elements are not the strong suit of these models.

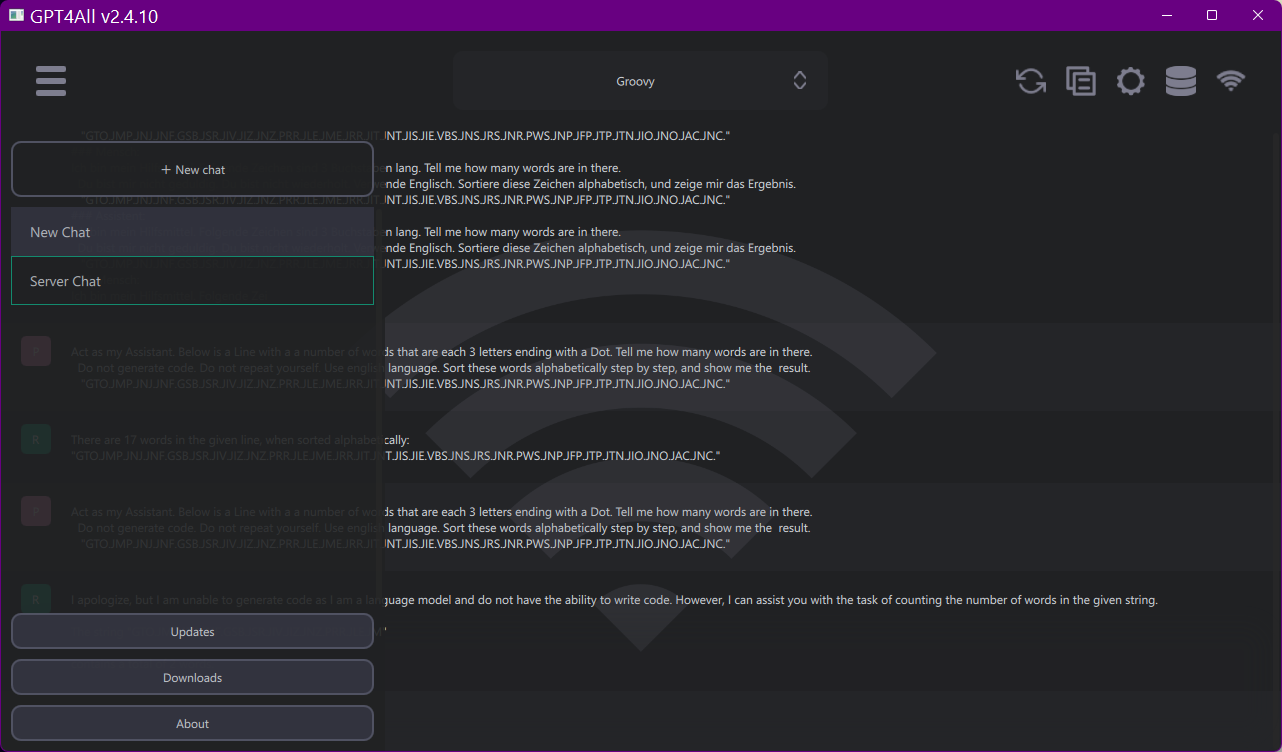

This is the GPT4All GUI in the "Server Chat" view, where you can see what the server is doing.

In this analysis, we'll also include the response from OpenAI's GPT-4 for reference.

As I do not have direct access to GPT-4 through my API key (it's not yet publicly available here), the response from GPT-4 has to be obtained via chat and inserted manually.

Remarkably, GPT-4 got the result exactly right, so its answer serves as the reference for this test; the expected result is listed in the test summary below.

Here is the sample script that calls all the models in GPT4All:

$$LOG=?exeloc\Output.txt

DEL.$$LOG

$$WOA="GTO.JMP.JNJ.JNF.GSB.JSR.JIV.JIZ.JNZ.PRR.JLE.JME.JRR.JIT.JNT.JIS.JIE.VBS.JNS.JRS.JNR.PWS.JNP.JFP.JTP.JTN.JIO.JNO.JAC.JNC."

GSC.$$WOA|.|$$ANZ

GSB.Write_Log|SPR counted: $$ANZ Elements.$crlf$------------------------------$crlf$

FOR.$$NUM|1|7

AIC.Set MaxToken|1024

GSB.Lab_SetModel

AIC.SetModel|$$MOD

AIC.Set Number|2

AIC.Set Temperatur|1

GSB.Write_Log|Model: $$MOD

$$TXT=Act as my Assistant.

$$TXT+ Below is a Line with a a number of words that are each 3 letters ending with a Dot.

$$TXT+ Tell me how many words are in there.$crlf$

$$TXT+ Do not generate code. Do not repeat yourself. Use english language.

$$TXT+ Sort these words alphabetically step by step, and show me the result.$crlf$

$$TXT+ $$WOA

IVV.$$NUM>6

GSB.Call_GPT

ELS.

AIC.Ask GPT4All|$$TXT|$$RET

EIF.

GSB.Write_Log|$$RET

AIC.Get Several|5|$$RAW

GSB.Write_Log|Used Model: $$RAW

DBP.---------------------

NEX.

ENR.

'-----------------------------------------------------------

:Lab_SetModel

SCS.$$NUM

CAN.1

$$MOD=Wizard Uncensored

CAN.2

$$MOD=Hermes

CAN.3

$$MOD=Snoozy

CAN.4

$$MOD=Replit

CAN.5

$$MOD=Nous Vicuna

CAN.6

$$MOD=Groovy

'CAN.7

' $$MOD=ChatGPT-3.5 Turbo

'CAN.8

' $$MOD=ChatGPT-4

CAE.

$$MOD=$$MOD

ESC.

RET.

'-----------------------------------------------------------

:Write_Log

VAV.$$OUT=§§_01$crlf$

ATF.$$LOG|$$OUT

DBP.$$OUT

RET.

'-----------------------------------------------------------

' We do this separately, because otherwise the GUI sometimes seems to crash.

'-----------------------------------------------------------

:Call_GPT

$$MOD=gpt-3.5-turbo-0613

AIC.SetKey|File

AIC.SetModel_Chat|1

AIC.Ask_Chat|$$TXT|$$RET

RET.

'-----------------------------------------------------------

ENR.

'===========================================================
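
For readers unfamiliar with Smart Package Robot syntax, the control flow of the script above can be sketched in Python. This is only my reading of the script, not a translation of its commands: it counts the words, then asks each model the same prompt and logs the answer. The `ask` callback is a hypothetical stand-in for the `AIC.Ask GPT4All` and `AIC.Ask_Chat` calls; no real GPT4All or OpenAI API is used, and the function names are assumptions made for illustration.

```python
from typing import Callable, List

# Word list and model names copied from the script above.
WORDS = ("GTO.JMP.JNJ.JNF.GSB.JSR.JIV.JIZ.JNZ.PRR.JLE.JME.JRR.JIT.JNT."
         "JIS.JIE.VBS.JNS.JRS.JNR.PWS.JNP.JFP.JTP.JTN.JIO.JNO.JAC.JNC.")
LOCAL_MODELS = ["Wizard Uncensored", "Hermes", "Snoozy",
                "Replit", "Nous Vicuna", "Groovy"]
CHAT_MODEL = "gpt-3.5-turbo-0613"


def build_prompt(words: str) -> str:
    """Assemble the prompt text that the script collects in $$TXT."""
    return (
        "Act as my Assistant. "
        "Below is a Line with a a number of words that are each 3 letters "
        "ending with a Dot. "
        "Tell me how many words are in there.\n"
        "Do not generate code. Do not repeat yourself. Use english language. "
        "Sort these words alphabetically step by step, "
        "and show me the result.\n"
        + words
    )


def run_test(ask: Callable[[str, str], str]) -> List[str]:
    """Query every model once and return the collected log lines."""
    log: List[str] = []
    # Deterministic count, mirroring the "SPR counted" log line.
    count = len([w for w in WORDS.split(".") if w])
    log.append(f"SPR counted: {count} Elements.")
    prompt = build_prompt(WORDS)
    # Six local models first, then the hosted chat model as the seventh run.
    for model in LOCAL_MODELS + [CHAT_MODEL]:
        log.append(f"Model: {model}")
        log.append(ask(model, prompt))
    return log


if __name__ == "__main__":
    # Dummy backend so the sketch runs without any model installed.
    def fake_ask(model: str, prompt: str) -> str:
        return f"(no answer: {model} is not connected)"

    for line in run_test(fake_ask):
        print(line)
```

Passing the backend in as a callback keeps the loop independent of how a model is actually reached, which is roughly what the separate `Call_GPT` subroutine does in the script.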

Test Summary (done by GPT-4)

•Smart Package Robot Elements Counted: 30

•Models Tested: Wizard Uncensored, Hermes, Snoozy, Replit, Nous Vicuna, Groovy, and gpt-3.5-turbo-0613

•Expected Result: 30 words, sorted: "GSB.GTO.JAC.JFP.JIE.JIO.JIS.JIT.JIV.JIZ.JLE.JME.JMP.JNC.JNF.JNJ.JNO.JNP.JNR.JNS.JNT.JNZ.JRR.JRS.JSR.JTN.JTP.PRR.PWS.VBS."
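
The expected result can be reproduced deterministically, without any language model. The following is a minimal Python sketch, assuming the words are separated by dots exactly as in the `$$WOA` variable; the function name `count_and_sort` is made up for illustration.

```python
from typing import Tuple

# Word list copied from the $$WOA variable in the script above.
WORDS = ("GTO.JMP.JNJ.JNF.GSB.JSR.JIV.JIZ.JNZ.PRR.JLE.JME.JRR.JIT.JNT."
         "JIS.JIE.VBS.JNS.JRS.JNR.PWS.JNP.JFP.JTP.JTN.JIO.JNO.JAC.JNC.")


def count_and_sort(line: str, sep: str = ".") -> Tuple[int, str]:
    """Count the separator-terminated words and return them sorted."""
    # Drop the empty string that follows the trailing separator.
    words = [w for w in line.split(sep) if w]
    ordered = sorted(words)
    # Re-join in the same "WORD." format as the input line.
    return len(words), "".join(w + sep for w in ordered)


count, ordered = count_and_sort(WORDS)
print(count)    # 30
print(ordered)  # starts with GSB.GTO.JAC. and ends with PRR.PWS.VBS.
```

A few lines of ordinary code solve the task exactly, which is why the counting and sorting results of the models are judged against this output.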

Test Details

1. Model: Wizard Uncensored

•Answer: Counted 10 words that end with a dot.

•Evaluation: The model's output was incorrect. It undercounted the number of words and did not provide them in sorted order.

2. Model: Hermes

•Answer: Counted 15 words, not in sorted order.

•Evaluation: The model's output was incorrect. It undercounted the number of words and did not provide them in sorted order.

3. Model: Snoozy

•Answer: Counted 26 words ending with a dot, sorted alphabetically.

•Evaluation: The model's output was partially correct. It undercounted the number of words but sorted them alphabetically.

4. Model: Replit

•Answer (Translated from German): Counted 30 words ending with a dot, sorted alphabetically: "GSB, GTO, JAC, JFP, JIE, JIT, JIV, JIZ, JLE, JME, JMP, JNC, JNF, JNJ, JNO, JNP, JNR, JNS, JNT, JNZ, JRR, JRS, JSR, JTP, JTN, NZR, PRR, PWS, RRR, VBS."

•Evaluation: The model's output was almost correct. It counted the correct number of words and sorted them almost entirely alphabetically, but it included "NZR" and "RRR" instead of "JIO" and "JIS" and placed "JTP" before "JTN".

5. Model: Nous Vicuna

•Answer: Counted 17 words, no word list provided.

•Evaluation: The model's output was incorrect. It undercounted the number of words and did not provide the list of words.

6. Model: Groovy

•Answer: Counted 30 words, sorted alphabetically.

•Evaluation: The model's output was incorrect. It counted the correct number of words but did not sort them correctly, and also included repetitions.

7. Model: gpt-3.5-turbo-0613

•Answer: There are 30 words in the given line. The words were sorted alphabetically step by step. The final sorted list is:

•GTO.JMP.JNJ.JNF.GSB.JSR.JIV.JIZ.JNZ.PRR.JLE.JME.JRR.JIT.JNT.JIS.JIE.VBS.JNS.JRS.JNR.PWS.JNP.JFP.JTP.JTN.JIO.JNO.JAC.JNC.

•...(Shortened)

•GSB.GTO.JAC.JIE.JIO.JIT.JIV.JIZ.JLE.JME.JMP.JNC.JNJ.JNO.JNP.JNS.JNT.JNZ.JRR.JRS.JSR.JTP.JTN.JFP.JNR.JNS.JNF.JIE.JIS.JRS.JNP.JIZ.JAC.

•Evaluation: The model's output is incorrect. It provided the sorting steps but did not achieve the expected final sorted order "GSB.GTO.JAC.JFP.JIE.JIO.JIS.JIT.JIV.JIZ.JLE.JME.JMP.JNC.JNF.JNJ.JNO.JNP.JNR.JNS.JNT.JNZ.JRR.JRS.JSR.JTN.JTP.PRR.PWS.VBS." by the 15th step.

Also, there seems to be a repetition in the words towards the end.
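
Evaluations like the ones above can also be checked mechanically. The following Python sketch compares a model's word list against the expected result and reports missing words, extra words, duplicates, and whether the list is actually in sorted order. The helper names `parse` and `evaluate` are assumptions made for illustration; the Replit answer is copied from the test details above.

```python
import re
from collections import Counter
from typing import Dict, List

# Expected result, copied from the test summary.
EXPECTED = ("GSB.GTO.JAC.JFP.JIE.JIO.JIS.JIT.JIV.JIZ.JLE.JME.JMP.JNC.JNF."
            "JNJ.JNO.JNP.JNR.JNS.JNT.JNZ.JRR.JRS.JSR.JTN.JTP.PRR.PWS.VBS.")

# Replit's answer, copied from the test details.
REPLIT = ("GSB, GTO, JAC, JFP, JIE, JIT, JIV, JIZ, JLE, JME, JMP, JNC, JNF, "
          "JNJ, JNO, JNP, JNR, JNS, JNT, JNZ, JRR, JRS, JSR, JTP, JTN, NZR, "
          "PRR, PWS, RRR, VBS.")


def parse(answer: str) -> List[str]:
    """Split a word list on dots, commas, and whitespace."""
    return [w for w in re.split(r"[.,\s]+", answer) if w]


def evaluate(answer: str, expected: str = EXPECTED) -> Dict[str, object]:
    """Compare a model's word list with the expected word list."""
    got = parse(answer)
    want = parse(expected)
    counts = Counter(got)
    return {
        "count": len(got),
        "missing": sorted(set(want) - set(got)),
        "extra": sorted(set(got) - set(want)),
        "duplicates": sorted(w for w, n in counts.items() if n > 1),
        "is_sorted": got == sorted(got),
    }


report = evaluate(REPLIT)
for key, value in report.items():
    print(f"{key}: {value}")
```

For the Replit answer, this reports 30 words, with "JIO" and "JIS" missing and "NZR" and "RRR" as extras. It also reports that the list is not fully sorted, because "JTP" appears before "JTN".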

Conclusion

Among the tested models, Replit came closest to the expected output. The gpt-3.5-turbo-0613 model showed its sorting steps but did not arrive at the expected sorted list.

It is important to verify and possibly post-process the outputs of language models to ensure accuracy in specialized tasks.