MiniRobotLanguage (MRL)

AIC.Set_MaxToken

Set the maximum number of input and output tokens for the LLM

Intention

The AIC.Set_MaxToken command acts as a powerful dial in controlling the richness and extent of content generated by the LLM (Large Language Model) AI.

Picture this: you're asking a wise sage a question, and you have the ability to control the length of the sage's response - that's essentially what this command does!

By default, think of the AI as a somewhat reserved character; it usually limits itself to a concise response. For instance, with OpenAI, it’s like the AI has an internal pact to keep responses capped at around 256 tokens (the default). Tokens, by the way, are chunks of text that can be as small as a single character or as long as a word, like 'a' or 'apple'.

Now, let’s say you're not just looking for a quick answer but a more elaborate one, almost like asking a storyteller to weave a rich tapestry of words.

This is where "AIC.Set MaxToken" swings into action. By tweaking the `max_tokens` parameter, you're essentially nudging the AI to either expand or condense its creative canvas. In addition, you can state the output length you expect directly in the prompt.

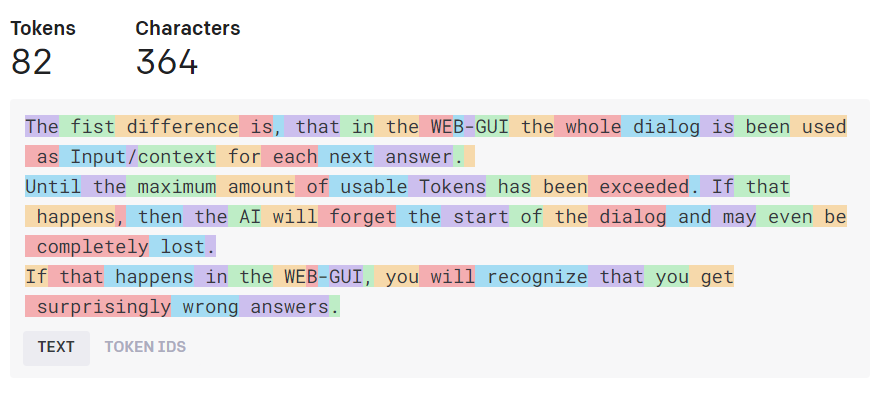

To learn more about tokens, you can use the OpenAI Tokenizer.

For example:

- Set `max_tokens` to 50, and you might get: "The cat sat on the mat."

- Set it to 200, and it could turn into:

"On a sunny afternoon, the mischievous cat, with its glistening fur, found solace on an antique mat, which had stories woven into its fabric."

But, be cautious; if you let the AI go too wild, it might give you a lot more than you bargained for, like an epic befitting a tome. Also, you don’t want the AI to start rambling and lose focus, giving you irrelevant or redundant information.

Now, let’s talk about costs. Imagine tokens as currency - the more tokens you use, the more you’ll need to pay. So, if you're on a budget or managing resources for a larger project, judicious use of "AIC.Set MaxToken" is key. It's like fine dining; you want to savor each bite (or in this case, token) for the richness it brings, without overindulging.

Moreover, different AI models come with their own specialties and pricing, like choosing between a sumptuous buffet or an à la carte menu. Managing the `max_tokens` parameter allows you to optimize the selection of AI models based on the quality, efficiency, and cost that align with your objectives.

In summary, "AIC.Set MaxToken" is like the conductor of an orchestra, ensuring that each section comes together harmoniously to create a symphony that is just the right length and tempo. Whether you're looking for a succinct answer, a detailed explanation, or a narrative masterpiece, this command holds the key to unlocking the AI’s potential, while also keeping an eye on the purse strings.

Imagine you’re using a magical quill that writes stories, answers questions, or generates reports. However, this quill has an inkpot (the AI model) that gets used up with every word written. The "AIC.Set MaxToken" command allows you to determine how much ink to use for each task.

In the world of AI, this ‘ink’ is measured in tokens. A token can be as small as a single character ('a') or as long as a word ('apple').
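As a rough illustration of how text length relates to tokens, here is a minimal Python sketch. It assumes about 3 characters per token, the ratio implied by the comment in this page's example script (2000 tokens is about 6000 characters). Real tokenizers work differently and the ratio varies with language and content, so `estimate_tokens` is a hypothetical helper for budgeting only, not a replacement for the OpenAI Tokenizer.

```python
import math

# Assumption: roughly 3 characters per token, taken from the example
# comment on this page (2000 tokens ~ 6000 characters). Not exact.
CHARS_PER_TOKEN = 3


def estimate_tokens(text: str, chars_per_token: int = CHARS_PER_TOKEN) -> int:
    """Hypothetical helper: rough token estimate based on character count."""
    if not text:
        return 0
    return math.ceil(len(text) / chars_per_token)


# A 23-character sentence is estimated at 8 tokens under this assumption.
print(estimate_tokens("The cat sat on the mat."))
```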

By default, the AI is set to use a limited amount of ink, say enough for 256 tokens. This usually results in short and concise responses.

But what if you need more detailed responses? Here’s where “AIC.Set MaxToken” comes into play. By adjusting the max_tokens parameter, you can tell the magical quill to use more ink for a more elaborate composition.

Especially when you work with code, you can hardly have too many tokens, yet each model's architecture imposes an upper limit:

| Model Name | Max Tokens (Chat Endpoint) | Max Tokens (Completion Endpoint) |
|---|---|---|
| gpt-3.5-turbo | 4096 | Not Applicable |
| gpt-3.5-turbo-16k | 16384 | Not Applicable |
| gpt-4 (8K) | 8192 | Not Applicable |
| gpt-4 (32K) | 32768 | Not Applicable |
| text-davinci-003 | Not Applicable | 4096 |
| davinci | Not Applicable | 2048 |
| curie | Not Applicable | 2048 |
| babbage | Not Applicable | 2048 |
| ada | Not Applicable | 2048 |
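The limits in the table above apply to prompt and response together, so a long prompt leaves less room for the answer. The following Python sketch shows one way to check a planned `max_tokens` value against a model's limit. The function name `fit_max_tokens` is hypothetical, and the prompt token count is assumed to be known or estimated beforehand.

```python
# Context limits (in tokens) copied from the table above.
CONTEXT_LIMITS = {
    "gpt-3.5-turbo": 4096,
    "gpt-3.5-turbo-16k": 16384,
    "gpt-4 (8K)": 8192,
    "gpt-4 (32K)": 32768,
    "text-davinci-003": 4096,
    "davinci": 2048,
    "curie": 2048,
    "babbage": 2048,
    "ada": 2048,
}


def fit_max_tokens(model: str, prompt_tokens: int, requested: int) -> int:
    """Hypothetical helper: largest usable max_tokens value not above `requested`."""
    available = CONTEXT_LIMITS[model] - prompt_tokens
    if available <= 0:
        raise ValueError("The prompt alone fills the model's context window.")
    return min(requested, available)


# A 3900-token prompt on gpt-3.5-turbo leaves only 196 tokens for the answer.
print(fit_max_tokens("gpt-3.5-turbo", 3900, 300))
```

If the returned value is much smaller than what you asked for, consider shortening the prompt or switching to a model with a larger limit.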

However, there is a caveat - this magical ink is not free!

Imagine you have a prepaid ink pot. The more ink (tokens) the quill uses, the faster your balance depletes.

This is exactly how AI cost management works. Each token has a cost associated with it, and the more tokens you use, the higher the cost of your API call.

For instance:

If you set max_tokens to 50, it’s like writing a brief note. Cost-wise, imagine it as buying a small coffee.

Set it to 200, and you’re looking at a more detailed piece, akin to a short story. The cost now may be comparable to a fancy latte with all the toppings.

It’s also important to know that, like coffee shops, different AI models have different pricing. A highly sophisticated model might produce richer content but at a premium cost, while a basic model could be more economical for simple tasks.

To make the most out of your ink pot (and budget), you want to strike a balance. Use the “AIC.Set MaxToken” command judiciously to ensure you’re getting the quality and detail you need without unnecessarily draining your resources.

In summary, as an end-user, think of “AIC.Set MaxToken” as your way to control the AI’s verbosity and depth. It’s like having a volume knob and a quality selector, combined with a budget manager. Turn it up for richer content but be mindful of the costs, or dial it down for quick answers while saving your ink (and coins) for when you really need them.

The more tokens used in input and output, the higher the cost will be. It's essential to manage tokens efficiently to control costs.

| Model | Context Window Size | Input Cost ($/1K tokens) | Output Cost ($/1K tokens) | Description |
|---|---|---|---|---|
| GPT-4 | 8K tokens | $0.03 | $0.06 | Broad general knowledge and domain expertise, capable of following complex instructions in natural language and solving difficult problems with accuracy. |
| GPT-4 | 32K tokens | $0.06 | $0.12 | |
| GPT-3.5-turbo | 4K tokens | $0.0015 | $0.002 | Optimized for dialogues, performance on par with Instruct Davinci. Useful for tasks like drafting emails, writing code, answering questions, creating conversational agents, and more. |
| GPT-3.5-turbo | 16K tokens | $0.003 | $0.004 | |
| DALL-E 2 (image generation) | Default: 256x256 pixels | Varies based on usage | Varies based on usage | Generates images; default image size is 256x256 pixels, up to 1024x1024. |

Prices are subject to change at any time, so always check the OpenAI website for the current models and prices.
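To make the pricing arithmetic concrete, the following Python sketch estimates the cost of a single call from the per-1K-token prices listed in the table above. The prices are copied from that table and may be outdated. The dictionary keys and the function name `estimate_cost` are hypothetical labels chosen for this illustration. Setting `output_tokens` to your `max_tokens` value gives the worst-case cost of the response.

```python
# (input price, output price) in USD per 1K tokens, copied from the table
# above. Assumption: these values may no longer match current pricing.
PRICES = {
    "GPT-4 8K": (0.03, 0.06),
    "GPT-4 32K": (0.06, 0.12),
    "GPT-3.5-turbo 4K": (0.0015, 0.002),
    "GPT-3.5-turbo 16K": (0.003, 0.004),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: estimated cost of one call in USD."""
    input_price, output_price = PRICES[model]
    return input_tokens / 1000 * input_price + output_tokens / 1000 * output_price


# Worst case for a 100-token prompt with max_tokens set to 300.
for name in PRICES:
    print(f"{name}: ${estimate_cost(name, 100, 300):.5f}")
```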

Syntax

AIC.Set_MaxToken|P1

AIC.Set MaxToken|P1

Parameter Explanation

P1 - Numeric value, or a variable containing a number: the maximum number of tokens.

Example

'*****************************************************

' EXAMPLE 1: AIC.-Commands

'*****************************************************

' Set OpenAI API-Key from the saved File

AIC.SetKey|File

FOR.$$LEE|0|11

$$RET=

' Set Model

AIC.SetModel_Chat|$$LEE

' Set Model-Temperature

AIC.Set_Temperature|0

' Set Max-Tokens (possible length of the answer, depending on the Model up to 2000 Tokens, which is about ~6000 characters)

' The more Tokens you use the more you need to pay. But the longer Input and Output can be.

AIC.Set_MaxToken|300

' Ask Question and receive answer to $$RET

$$QUE=Act as a mathematician. Calculate x for the formula "5*x^3=1450". Do it step-by-step.

AIC.Ask_Chat|$$QUE|$$RET

CLP.$$RET

MBX.Model: $$LEE $crlf$$$RET

NEX.

:enx

ENR.

Remarks

-

Limitations:

-

See also:

• Set_Key
