MiniRobotLanguage (MRL)

AIC.Ask_Chat

Ask or instruct the most advanced OpenAI models available for the "Chat endpoint" and receive an answer.

Intention

The AIC.Ask_Chat command sends a question or instruction to the most advanced OpenAI models and receives an answer.

Before you can use this command, you need an OpenAI API key; see: AI - Artificial Intelligence Commands.

Use the AIC.Set_Model_Chat command to specify the OpenAI model you want to use for chat-based conversations.

Several other commands can be used to configure the environment for the AI.

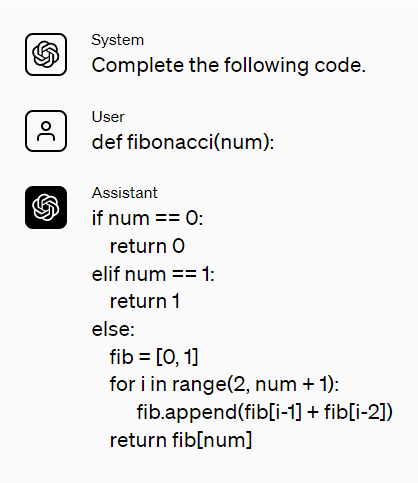

Example from OpenAI showing how to use the Chat Completion endpoint.

Once all preconditions are set, the usage of this command is as simple as:

$$QUS=Act as 3 different Persons with multiple Qualifications in the field of System Administration.

$$QUS=$$QUS let each of the 3 Persons write a Script that will examine the hard drive.

$$QUS=$$QUS then evaluate all 3 results and give me the best result-Script.

AIC.Ask|$$QUS|$$ANS

MBX. Here is the Script:$crlf$$$ANS

ENR.

Using this SPR command is different from chatting with ChatGPT via the Internet.

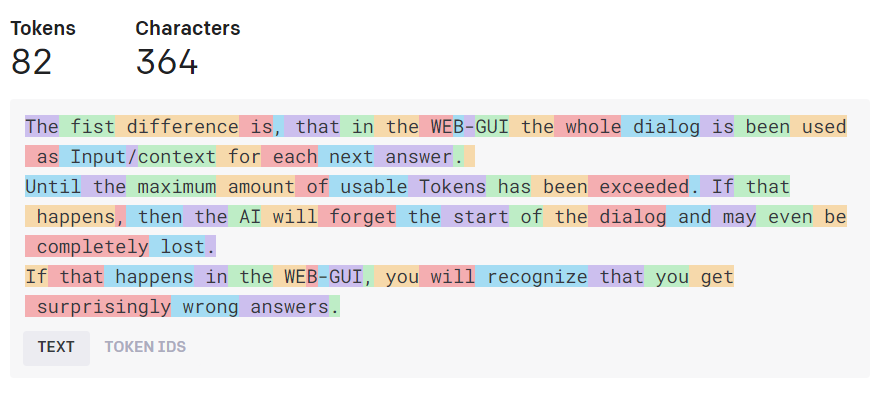

Before discussing the details, you need to understand the concept of tokens. In natural language processing (NLP), a "token" typically refers to a unit of text.

In the simplest sense, tokens can be thought of as words.

For example, the sentence "I love AI" can be broken down into three tokens: "I", "love", and "AI".

However, tokens can also represent smaller units such as characters or subwords, or larger units like sentences, depending on the context.

See picture below.
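As a minimal illustration of the two simplest ways of splitting text mentioned above, here is a Python sketch of word-level and character-level tokenization. It is only an illustration; OpenAI models use a learned subword tokenizer, not either of these.

```python
def word_tokens(text: str) -> list[str]:
    # Word-level: split on whitespace.
    return text.split()

def char_tokens(text: str) -> list[str]:
    # Character-level: every character (including spaces) is a token.
    return list(text)

sentence = "I love AI"
print(word_tokens(sentence))       # ['I', 'love', 'AI']
print(len(char_tokens(sentence)))  # 9
```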

For large language models (LLMs), tokens play a crucial role in how text is processed.

These models read text in chunks called tokens.

Managing tokens is an important aspect of using chat models. Tokens are chunks of text that models read, and the total number of tokens in an API call affects the cost, time taken, and whether the API call works at all.

For gpt-3.5-turbo, the maximum limit is 4096 tokens. Both input and output tokens count toward these quantities.

GPT-3, one of OpenAI's language models, understands and generates human-like text by predicting the probability of a sequence of tokens.

Now, let's talk about OpenAI's language models and tokens.

OpenAI’s models, like GPT-3, are not just limited to English; they can process text in multiple languages.

Additionally, a single token can represent a whole word, a part of a word, or even a single character, depending on the language and context.

For example, the word "chatbot" might be a single token, but in some languages or contexts, it might be split into multiple tokens like "chat" and "bot".
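To show how the same word can become one token or several, here is a toy greedy longest-match subword tokenizer in Python. The vocabularies are invented for this example; OpenAI's real tokenizers use byte-pair encoding with a large learned vocabulary, so actual splits will differ.

```python
def greedy_subword_tokens(word: str, vocab: set[str]) -> list[str]:
    """Split a word into the longest vocabulary pieces, left to right.
    Falls back to a single character when no vocabulary piece matches."""
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest possible piece first, then shrink it.
        for j in range(len(word), i, -1):
            piece = word[i:j]
            if piece in vocab or j == i + 1:
                tokens.append(piece)
                i = j
                break
    return tokens

# Two hypothetical vocabularies, for illustration only.
print(greedy_subword_tokens("chatbot", {"chatbot", "chat", "bot"}))  # ['chatbot']
print(greedy_subword_tokens("chatbot", {"chat", "bot"}))             # ['chat', 'bot']
```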

There is also the concept of "maximum available tokens" for OpenAI models: the maximum number of tokens that a model can process in a single request or operation.

Do you remember the times when the first computers had a maximum capacity of 4 KB?

This is where we are in terms of AI now.

For example, GPT-3 has a maximum token limit of 4096 tokens (as of 01.06.2023).

This means that if you want GPT-3 to process a text, the total number of tokens in that text must not exceed 4096.

The token limit includes both input and output tokens.

If the text is too long, you need to truncate or shorten it to fit within this limit.

Otherwise the API call may fail, or the model will lose track of the start of the text when reading the end.
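The arithmetic behind the limit is simple: the prompt tokens plus the tokens reserved for the answer must stay within the model's limit. Below is a minimal Python sketch, assuming the 4096-token limit quoted above and a prompt that has already been split into tokens (counting tokens is not shown). Keeping the first part of the prompt is only one possible truncation choice.

```python
CONTEXT_LIMIT = 4096  # limit quoted above for gpt-3.5-turbo (mid-2023)

def fits_context(prompt_tokens: int, max_answer_tokens: int,
                 limit: int = CONTEXT_LIMIT) -> bool:
    # Input and output tokens share the same limit.
    return prompt_tokens + max_answer_tokens <= limit

def truncate_prompt(tokens: list[str], max_answer_tokens: int,
                    limit: int = CONTEXT_LIMIT) -> list[str]:
    # Keep only as many prompt tokens as the remaining budget allows.
    budget = max(limit - max_answer_tokens, 0)
    return tokens[:budget]

print(fits_context(3500, 300))   # True  (3800 <= 4096)
print(fits_context(3900, 300))   # False (4200 > 4096)
print(len(truncate_prompt(["tok"] * 5000, 300)))  # 3796
```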

If you want to experiment with tokens, you can use the OpenAI online Tokenizer.

It's important to note that token limits are not necessarily fixed and may change over time as models are updated or new models are released.

Additionally, different models may have different token limits.

What is the difference between using the SPR and using the ChatGPT web interface?

In the web interface, the whole dialog is used as input (context) for each new answer, until the maximum number of usable tokens is exceeded. When that happens, the AI forgets the start of the dialog and may even get completely lost.

In the web interface, you will notice this when you suddenly get surprisingly wrong answers.

When you use OpenAI via the SPR, this is generally NOT the case.

First, you can set the maximum number of tokens to use with the command AIC.Set MaxToken.

Second, every AIC.Ask_Chat command is a completely new request and, by itself, does not remember anything that came before.

This saves you a lot of tokens.

With the SPR you can use more tokens per request, because each chat is generally "new" and starts with the full number of available tokens.

It is limited only by the AIC.Set MaxToken setting and the maximum number of tokens of the model in use.

The AIC.Ask_Chat command does not automatically include previous questions and answers in the AI processing.

Every AIC.Ask_Chat command starts completely fresh, which saves tokens (and costs) and leaves more tokens for your answer.
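The token saving can be made concrete with a simplified model. In this Python sketch, the per-turn token counts are invented, and the web-interface side is idealized as "re-send the whole dialog each time"; how ChatGPT actually manages its context is not described on this page.

```python
def gui_style_inputs(turn_tokens: list[int]) -> list[int]:
    # Web-GUI style (idealized): each request re-sends the whole dialog so far.
    inputs, history = [], 0
    for t in turn_tokens:
        history += t
        inputs.append(history)
    return inputs

def stateless_inputs(turn_tokens: list[int]) -> list[int]:
    # AIC.Ask_Chat style: each request contains only the current prompt.
    return list(turn_tokens)

turns = [500, 500, 500, 500]  # hypothetical tokens added per turn
print(gui_style_inputs(turns))   # [500, 1000, 1500, 2000]
print(stateless_inputs(turns))   # [500, 500, 500, 500]
print(sum(gui_style_inputs(turns)), sum(stateless_inputs(turns)))  # 5000 2000
```

In the accumulating model, the input of the last turn alone already reaches 2000 tokens, while each stateless request stays at its own size.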

You can access the chat history with the AIC.Get_History command and the other AI history commands, and in this way

include parts or all of earlier chats in the current prompt. In most cases, however, this is not useful here.

Therefore, the rule is:

Include all needed instructions and examples in the current prompt.
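Following that rule, a prompt can be assembled from its parts before it is sent. Here is a minimal Python sketch; the helper `build_prompt` and its section labels are hypothetical and not part of the SPR.

```python
def build_prompt(instructions: str, examples: list[str], question: str,
                 earlier_excerpt: str = "") -> str:
    # Hypothetical helper: put everything the model needs into ONE prompt,
    # because each request starts without memory of earlier requests.
    parts = [instructions]
    if examples:
        parts.append("Examples:\n" + "\n".join(f"- {e}" for e in examples))
    if earlier_excerpt:
        # Optional: a relevant excerpt copied from an earlier chat.
        parts.append("Context from an earlier answer:\n" + earlier_excerpt)
    parts.append("Task: " + question)
    return "\n\n".join(parts)

prompt = build_prompt(
    "Act as a mathematician. Work step-by-step.",
    ["2*x = 10  ->  x = 5"],
    'Calculate x for the formula "5*x^3=1450".',
)
print(prompt)
```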

You can retrieve the chat history, the last question, or the last answer with these commands:

AIC.Get_History|$$HIS

AIC.Get_Last_Question|$$QUE

AIC.Get_Last_Answer|$$ANS

OpenAI currently offers two chat models that can be used with the so-called "chat completion endpoint": gpt-3.5-turbo and gpt-4.

These models can be used to build various applications such as:

• drafting emails,

• writing Python or other code,

• answering questions,

• creating conversational agents,

• tutoring,

• language translation,

• simulating characters, for example in video games,

• and more.

The AIC.Ask_Chat command takes your prompt as input and returns a model-generated message as output.

Because the models have no memory of past requests, all relevant information must be supplied in the current prompt.
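For orientation, here is a Python sketch of the kind of JSON body the OpenAI Chat Completions endpoint accepts for a single, stateless question. It only builds the body and does not send it. How the SPR assembles its request internally is not documented on this page, so the mapping of the SPR settings to `temperature` and `max_tokens` is an assumption.

```python
import json

def chat_request_body(prompt: str, model: str = "gpt-3.5-turbo",
                      temperature: float = 0.0, max_tokens: int = 300) -> str:
    # One self-contained user message: no earlier turns are included,
    # so the model sees only this prompt.
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return json.dumps(body)

print(chat_request_body("Calculate x for 5*x^3=1450, step-by-step."))
```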

Note that the Chat API, accessed through AIC.Ask_Chat, gives access to a more advanced set of models which, though more capable, may also cost more. In summary:

AIC.Ask_Chat: Ideal for more complex conversations; accesses advanced AI models like GPT-3.5 and GPT-4.

AIC.Set_Model_Completion: Best suited for single-turn tasks and low-cost tasks.

Users are advised to choose the appropriate command based on the complexity and nature of the tasks they wish to accomplish with the SPR system.

Instead of giving a model name, you can specify a model by number, like this:

' Here we would specify "gpt-3.5-turbo"

AIC.Set_Model_Chat|3

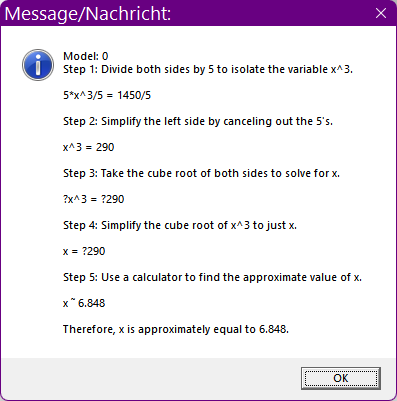

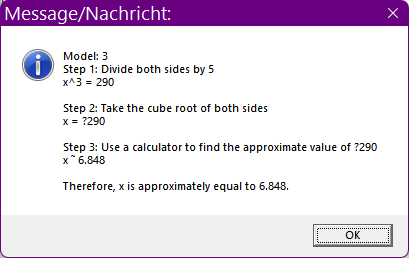

Here is a test script that shows how the different models answer the same problem.

Because of the complexity of the task, I have increased the maximum number of tokens.

' Set OpenAI API-Key from the saved File

AIC.SetKey|File

FOR.$$LEE|1|3

' Set Model

AIC.SetModel_Chat|$$LEE

' Set Model-Temperature

AIC.Set_Temperature|0

' Set Max-Tokens (possible length of the answer; depending on the model up to 2000 tokens, which is about ~6000 characters)

' The more tokens you allow, the more you pay, but the longer input and output can be.

AIC.SetMax_Token|300

' Ask Question and receive answer to $$RET

$$QUE=Act as a mathematician. Calculate x for the formula "5*x^3=1450". Do it step-by-step

AIC.Ask_Chat|$$QUE|$$RET

CLP.$$RET

MBX.Model: $$LEE $crlf$$$RET

NEX.

:enx

ENR.
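To check the models' step-by-step answers to this script, here is the direct computation in Python: divide both sides by 5, then take the cube root.

```python
# Solve 5*x^3 = 1450 directly, to check the models' answers.
x_cubed = 1450 / 5           # divide both sides by 5 -> x^3 = 290
x = x_cubed ** (1 / 3)       # real cube root (x_cubed is positive)
print(x_cubed)               # 290.0
print(round(x, 4))           # 6.6191
```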

| Model Number | Model Name | Comments |
| --- | --- | --- |
| 1 | gpt-4 | most current model |
| 2 | gpt-4-32k | most current model with 32k tokens (~96 KB text in/out) |
| 3 | gpt-3.5-turbo | standard model |
| 4 | gpt-3.5-turbo-16k | standard model with 16k tokens (~48 KB text in/out) |

If you specify "0", the default model No. 3 (gpt-3.5-turbo) is used.
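The number-to-model lookup described in the model table can be sketched in Python as follows. This only mirrors the table; it is not the SPR's internal code. How the SPR handles numbers outside the table is not stated here, so raising an error is just this sketch's choice.

```python
# Model numbers as listed in the model table; 0 selects the default (No. 3).
CHAT_MODELS = {
    1: "gpt-4",
    2: "gpt-4-32k",
    3: "gpt-3.5-turbo",
    4: "gpt-3.5-turbo-16k",
}
DEFAULT_MODEL_NUMBER = 3

def resolve_model(number: int) -> str:
    # Illustrative only: mirrors the table, not the SPR implementation.
    if number == 0:
        number = DEFAULT_MODEL_NUMBER
    if number not in CHAT_MODELS:
        raise ValueError(f"no chat model with number {number} in this table")
    return CHAT_MODELS[number]

print(resolve_model(0))  # gpt-3.5-turbo
print(resolve_model(2))  # gpt-4-32k
```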

Here are some highlights about GPT-4 and ChatGPT with the GPT-3.5-turbo engine.

GPT-4 is a newer language model developed by OpenAI, whereas GPT-3.5-turbo is the default engine within the ChatGPT family.

Functions and Applications:

Both GPT-4 and GPT-3.5-turbo can be used to:

• draft emails,

• write code,

• answer questions about documents,

• create conversational agents,

• give software a natural language interface,

• tutor in various subjects,

• translate languages,

• simulate characters for video games,

and much more.

GPT-4 has broad general knowledge and domain expertise and can follow complex instructions in natural language and solve difficult problems with accuracy.

Conversations can be as short as 1 message or fill many pages, and including the conversation history helps when user instructions refer to prior messages.
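In the OpenAI chat message format, including history means re-sending earlier turns with the roles `user` and `assistant`. Here is a minimal Python sketch with invented example turns; whether and how AIC.Ask_Chat exposes this format is not covered on this page.

```python
def with_history(history: list[tuple[str, str]], new_question: str) -> list[dict]:
    # Build an OpenAI-style "messages" list: earlier question/answer pairs
    # first, then the new question. Every included turn costs input tokens.
    messages = []
    for question, answer in history:
        messages.append({"role": "user", "content": question})
        messages.append({"role": "assistant", "content": answer})
    messages.append({"role": "user", "content": new_question})
    return messages

msgs = with_history([("What is 2+2?", "4")], "And multiplied by 3?")
print(len(msgs))  # 3
```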

Tokens:

Language models read text in chunks called tokens. A token can be as short as one character or as long as one word.

Both input and output tokens count toward the total tokens used in an API call.

The total number of tokens affects the cost, time, and whether the API call works at all.

Pricing (as of 19.09.2023; prices are subject to change at any time):

| Model | Context | Input Cost | Output Cost |
| --- | --- | --- | --- |
| GPT-3.5 Turbo | 4K | $0.0015 / 1K tokens | $0.002 / 1K tokens |
| GPT-3.5 Turbo | 16K | $0.003 / 1K tokens | $0.004 / 1K tokens |
| GPT-4 | 8K | $0.03 / 1K tokens | $0.06 / 1K tokens |
| GPT-4 | 32K | $0.06 / 1K tokens | $0.12 / 1K tokens |

Both models are powerful tools for natural language processing and can be used for a wide range of applications. GPT-4 is the newer model and is likely to have improvements over GPT-3.5-turbo. However, GPT-3.5-turbo is much more cost-effective, especially for applications that don't require the absolute cutting edge in language model performance.
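The cost difference can be worked out from the pricing table. This Python sketch uses the 19.09.2023 prices listed above (which may have changed since) and a hypothetical request with 1000 input and 500 output tokens; the mapping of table rows to API model names follows the model table.

```python
# Prices in USD per 1K tokens (input, output), from the pricing table (19.09.2023).
PRICES = {
    "gpt-3.5-turbo":     (0.0015, 0.002),
    "gpt-3.5-turbo-16k": (0.003, 0.004),
    "gpt-4":             (0.03, 0.06),
    "gpt-4-32k":         (0.06, 0.12),
}

def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    input_price, output_price = PRICES[model]
    # Input and output tokens are billed separately, per 1K tokens.
    return input_tokens / 1000 * input_price + output_tokens / 1000 * output_price

for model in ("gpt-3.5-turbo", "gpt-4"):
    print(model, round(request_cost(model, 1000, 500), 4))
# gpt-3.5-turbo 0.0025
# gpt-4 0.06
```

For this request, GPT-4 (8K) costs 24 times as much as GPT-3.5 Turbo (4K).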

Syntax

AIC.Ask_Chat|P1[|P2][|P3]

AIC.Ask|P1[|P2][|P3]

Parameter Explanation

P1 - <Prompt/Question>: This is your question / instruction to the AI.

P2 - (optional) Variable that receives the result / answer from the AI.

P3 - (optional) 0/1 flag: Specifies how the results are returned when multiple results are expected. If you have set the number of expected results to a value higher than 1 using AIC.Set Number, this flag determines how they are returned. If set to "1", only the last result is returned. If set to "0" (the default), all results are returned.
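The effect of the P3 flag can be sketched in Python as a simple selection. The helper `select_results` is hypothetical, and how the SPR combines several results into the single return variable P2 is not described here, so this sketch simply returns a list.

```python
def select_results(results: list[str], last_only: int = 0) -> list[str]:
    # Hypothetical mirror of P3: 1 -> only the last result, 0 -> all results.
    if last_only == 1:
        return results[-1:]
    return list(results)

answers = ["answer A", "answer B", "answer C"]  # e.g. 3 results requested
print(select_results(answers, 1))  # ['answer C']
print(select_results(answers))     # ['answer A', 'answer B', 'answer C']
```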

Example

'*****************************************************

' EXAMPLE 1: AIC.-Commands

' Here we let the AI Calculate x for the formula "5*x^3=1450"

'

'*****************************************************

' Set OpenAI API-Key from the saved File

AIC.SetKey|File

FOR.$$LEE|0|11

' Set Model

AIC.SetModel_Chat|$$LEE

' Set Model-Temperature

AIC.Set_Temperature|0

' Set Max-Tokens (possible length of the answer; depending on the model up to 2000 tokens, which is about ~6000 characters)

' The more tokens you allow, the more you pay, but the longer input and output can be.

AIC.SetMax_Token|1000

' Ask Question and receive answer to $$RET

$$QUE=Act as a mathematician. Calculate x for the formula "5*x^3=1450". Do it step-by-step

AIC.Ask_Chat|$$QUE|$$RET

CLP.$$RET

MBX.Model: $$LEE $crlf$$$RET

NEX.

:enx

ENR.

Note that the answer text is cut off at the end if the maximum number of tokens set in the script is too small.
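To pick a maximum-token value that is less likely to cut the answer off, you can estimate it from the expected answer length. This Python sketch assumes the rough ratio of about 3 characters per token used in the script comments (2000 tokens ~ 6000 characters) and a hypothetical 20 % safety margin; real ratios vary with language and content.

```python
import math

CHARS_PER_TOKEN = 3  # rough ratio from this page (2000 tokens ~ 6000 characters)

def suggest_max_tokens(expected_answer_chars: int, safety_percent: int = 120) -> int:
    # Estimate the tokens an answer of this length needs, plus a margin.
    return math.ceil(expected_answer_chars * safety_percent / (100 * CHARS_PER_TOKEN))

print(suggest_max_tokens(3000))  # 1200
```

With the raw OpenAI API, an answer that was cut off by the token limit is reported with `finish_reason` set to `"length"`; whether the SPR surfaces this value is not stated here.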

Remarks

When writing your prompts:

Ensure Clarity and Precision: Phrase your prompt so that it unambiguously communicates the desired output. Avoid vague or open-ended language, as it can yield unpredictable results.

Incorporate Pertinent Keywords: Include keywords that are directly related to the subject matter. This helps the model grasp the context and produce more precise content.

Supply Contextual Information: If necessary, give the model background information or context. This enables it to formulate more informed and contextually relevant responses.

Engage in Iterative Refinement: Experiment with a variety of prompts to find out which works best. Refine your prompts based on the output, adjusting them until you get the desired results.

Limitations:

-

See also:

• Set_Key
