MiniRobotLanguage (MRL)

AIC.Set_Model_Chat

Selects one of the OpenAI models that are available for the chat endpoint.

The chat endpoint models aim to give you the best possible answer to your instructions or questions.

Intention

The AIC.Set_Model_Chat command specifies the OpenAI model that the AIC.Ask_Chat command will use.

The syntax of this command is

AIC.Set_Model_Chat|<Modelname or Model-Number>

where <Modelname> is the name of the OpenAI model you want to use and <Model-Number> is its number from the table below. The default model is gpt-3.5-turbo.

At the time of writing, OpenAI offers two families of chat models that can be used with the chat completion endpoint: GPT-3.5 Turbo (gpt-3.5-turbo) and GPT-4 (gpt-4). The table below lists the specific variants you can select.

These models can be used to build various applications such as drafting emails, writing Python code, answering questions, creating conversational agents, tutoring, language translation, and simulating characters for video games among others.

The two commands

• AIC.Set_Model_Chat: selects the advanced chat models such as GPT-3.5 and GPT-4, and

• AIC.Set_Model_Completion: best suited for single-turn tasks and low-cost ("cheap") tasks,

internally use the same "Model-Register". Each command therefore overwrites any model that was selected before, no matter which of the two commands selected it.
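The following Python sketch illustrates the "last command wins" behavior of a shared register. It is a hypothetical model, not MRL's actual implementation: the class ModelRegister and its two methods are invented stand-ins for AIC.Set_Model_Chat and AIC.Set_Model_Completion.

```python
# Hypothetical model of the shared "Model-Register" (not MRL's real code).
# Both setters write the same slot, so the last call determines the model.


class ModelRegister:
    """A single model slot shared by both selection commands (assumption)."""

    def __init__(self, default: str = "gpt-3.5-turbo") -> None:
        self.model = default

    def set_model_chat(self, name: str) -> None:
        # Stand-in for AIC.Set_Model_Chat: writes the shared slot.
        self.model = name

    def set_model_completion(self, name: str) -> None:
        # Stand-in for AIC.Set_Model_Completion: writes the SAME slot.
        self.model = name


register = ModelRegister()
register.set_model_chat("gpt-4")
register.set_model_completion("gpt-3.5-turbo-1106")
print(register.model)  # gpt-3.5-turbo-1106 (the earlier "gpt-4" is gone)
```

In practice this means you should call AIC.Set_Model_Chat again after any AIC.Set_Model_Completion, right before you use AIC.Ask_Chat.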

As an alternative to giving a model name, you can specify a model by its number from the table below:

' Here we select "gpt-3.5-turbo-1106" (number 3 in the table)

AIC.Set_Model_Chat|3

| Number (P1) | Model name | Comments |
|---|---|---|
| 1 | gpt-3.5-turbo | Fast and cost-effective GPT-3.5 chat model, suitable for responsive and efficient tasks. |
| 2 | gpt-4 | GPT-4, with stronger comprehension and contextual capabilities than GPT-3.5, but slower and more expensive. |
| 3 | gpt-3.5-turbo-1106 | November 2023 version of GPT-3.5 Turbo with a 16K context window, improved function calling and reproducible outputs. |
| 4 | gpt-3.5-turbo-16k | GPT-3.5 Turbo variant with a 16K-token context window. |
| 5 | gpt-4-1106-preview | Preview of GPT-4 Turbo with a 128K context window and knowledge of world events up to April 2023. |
| 6 | gpt-4-vision-preview | Preview version of GPT-4 Turbo that can also take images as input. |
| 0 | gpt-3.5-turbo-1106 | Default model (same as number 3). |
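How P1 is interpreted can be sketched in Python as a lookup: a table number is replaced by its model name, and anything else is passed on unchanged as a literal model name. The function resolve_model is hypothetical, and the pass-through for unknown values is an assumption about MRL's behavior, not confirmed by this page.

```python
# Hypothetical resolution of P1 (not MRL's real code).
# Numbers come from the table above; other input is treated as a model name.
MODEL_TABLE = {
    0: "gpt-3.5-turbo-1106",
    1: "gpt-3.5-turbo",
    2: "gpt-4",
    3: "gpt-3.5-turbo-1106",
    4: "gpt-3.5-turbo-16k",
    5: "gpt-4-1106-preview",
    6: "gpt-4-vision-preview",
}


def resolve_model(p1: str) -> str:
    """Return the model name for P1, which may be a table number or a name."""
    text = p1.strip()
    if text.isdigit() and int(text) in MODEL_TABLE:
        return MODEL_TABLE[int(text)]
    # Assumption: any other value is used verbatim as the model name,
    # e.g. a newer model that is not in the table.
    return text


print(resolve_model("4"))                   # gpt-3.5-turbo-16k
print(resolve_model("gpt-4-1106-preview"))  # gpt-4-1106-preview
```

Selecting by name is the more future-proof option, because it does not depend on the numbering in this table.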

Temporary Models for 2023:

The following two models were announced by OpenAI in 11/2023 and are temporary, which is why we did not hard-code them.

To select them, use the model name directly.

New GPT-4 Turbo: "gpt-4-1106-preview" (Use AIC.Ask_Chat)

OpenAI announced GPT-4 Turbo as its most advanced model. It offers a 128K context window and knowledge of world events up to April 2023.

OpenAI has reduced pricing for GPT-4 Turbo considerably: input tokens are priced at $0.01/1K and output tokens at $0.03/1K, making it 3x and 2x cheaper, respectively, than the previous GPT-4 pricing.

Function calling has been improved, including the ability to call multiple functions in a single message, to always return valid functions with JSON mode, and better accuracy in returning the right function parameters.

Model outputs are more deterministic with our new reproducible outputs beta feature.

You can access GPT-4 Turbo by passing gpt-4-1106-preview in the API, with a stable production-ready model release planned later this year.
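Because input and output tokens are billed at different rates, the cost of a call is the sum of two terms. The Python sketch below uses only the GPT-4 Turbo prices quoted above (USD per 1K tokens, as of 11/2023); the function name request_cost is illustrative.

```python
# GPT-4 Turbo (gpt-4-1106-preview) prices as quoted above, USD per 1K tokens.
INPUT_PER_1K = 0.01
OUTPUT_PER_1K = 0.03


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one call; input and output are billed separately."""
    return input_tokens / 1000 * INPUT_PER_1K + output_tokens / 1000 * OUTPUT_PER_1K


# Example: a 1,500-token prompt that produces a 500-token answer.
cost = request_cost(1500, 500)
print(f"${cost:.4f}")  # $0.0300  (0.015 for input + 0.015 for output)
```

Output tokens cost three times as much as input tokens here, so limiting the answer length (see AIC.SetMax_Token in the example below) has the larger effect on cost.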

Updated GPT-3.5 Turbo: "gpt-3.5-turbo-1106" (Use AIC.Ask_Completion)

The new gpt-3.5-turbo-1106 supports a 16K context by default, and this 4x longer context is available at lower prices: $0.001/1K input, $0.002/1K output. Fine-tuning of this 16K model is available.

Fine-tuned GPT-3.5 is much cheaper to use: with input token prices decreasing by 75% to $0.003/1K and output token prices by 62% to $0.006/1K.

gpt-3.5-turbo-1106 joins GPT-4 Turbo with improved function calling and reproducible outputs.
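To compare the base and fine-tuned prices of gpt-3.5-turbo-1106 quoted above, the following Python sketch prices the same request on both variants. The request size (10,000 input tokens, 1,000 output tokens) is an arbitrary example, and cost_usd is an illustrative name.

```python
# gpt-3.5-turbo-1106 prices as quoted above, USD per 1K tokens.
PRICES = {
    "base": {"input": 0.001, "output": 0.002},
    "fine-tuned": {"input": 0.003, "output": 0.006},
}


def cost_usd(kind: str, input_tokens: int, output_tokens: int) -> float:
    """USD cost of one request for the given price tier."""
    p = PRICES[kind]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1000


base = cost_usd("base", 10_000, 1_000)         # 0.010 + 0.002 = 0.012
tuned = cost_usd("fine-tuned", 10_000, 1_000)  # 0.030 + 0.006 = 0.036
print(f"base ${base:.3f}, fine-tuned ${tuned:.3f}")
```

Even after the price cut, a fine-tuned GPT-3.5 model costs three times as much per token as the base model, so fine-tuning pays off mainly when it lets you use much shorter prompts.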

Here are some highlights of GPT-4 and of ChatGPT with the GPT-3.5-turbo engine.

GPT-4 is a newer language model developed by OpenAI, whereas GPT-3.5-turbo is the faster default engine within the ChatGPT family.

Functions and Applications:

Both GPT-4 and GPT-3.5-turbo can be used to:

• draft emails,

• write code,

• answer questions about documents,

• create conversational agents,

• give software a natural language interface,

• tutor in various subjects,

• translate languages,

• simulate characters for video games,

and much more.

GPT-4 has broad general knowledge and domain expertise and can follow complex instructions in natural language and solve difficult problems with accuracy.

Conversations can be as short as 1 message or fill many pages, and including the conversation history helps when user instructions refer to prior messages.
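In OpenAI's chat completions API, a conversation is sent as a list of messages, each with a role ("system", "user" or "assistant") and its content. The Python sketch below shows that structure; the conversation itself is made up, and whether AIC.Ask_Chat sends earlier turns automatically is not stated on this page.

```python
import json

# A conversation in the message format of OpenAI's chat completions API.
# Earlier turns are resent so the model can resolve references like "it".
messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "What is a Windows button?"},
    {"role": "assistant", "content": "It is the key with the Windows logo that opens the Start menu."},
    {"role": "user", "content": "Where is it on a laptop keyboard?"},
]

# Without the first three messages, "it" in the last one would be ambiguous.
print(json.dumps(messages, indent=2))
```

Every resent message counts as input tokens, so long histories increase the cost of each new call.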

GPT-3.5 is cheaper and faster, and it handles simple problems well, too.

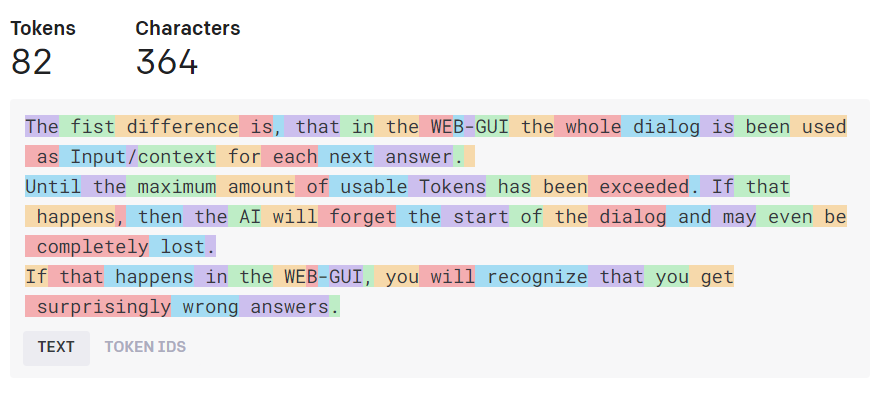

Tokens:

Language models read text in chunks called tokens. A token can be as short as one character or as long as one word.

Both input and output tokens count toward the total tokens used in an API call.

The total number of tokens affects the cost, time, and whether the API call works at all.

The image above shows how a text is divided into tokens before the AI processes it.
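Because input and output tokens share the model's context window, a call only works if the prompt plus the requested answer length fits. The Python sketch below checks this with OpenAI's rough rule of thumb of about 4 characters per token for common English text; exact counts require the model's own tokenizer (for example, the tiktoken library). The functions estimate_tokens and fits are illustrative. Some models also cap the output length separately (GPT-4 Turbo, for example, returns at most 4,096 tokens), which this sketch ignores.

```python
# Context window sizes in tokens (gpt-3.5-turbo-1106: "16K" = 16,385).
CONTEXT_WINDOW = {
    "gpt-3.5-turbo-1106": 16_385,
    "gpt-4-1106-preview": 128_000,
}


def estimate_tokens(text: str) -> int:
    """Rough estimate: about 4 characters per token (heuristic only)."""
    return max(1, len(text) // 4)


def fits(model: str, prompt: str, max_tokens: int) -> bool:
    """Input tokens plus the reserved output tokens must fit in the window."""
    return estimate_tokens(prompt) + max_tokens <= CONTEXT_WINDOW[model]


prompt = "word " * 8_000  # 40,000 characters, estimated at 10,000 tokens
print(fits("gpt-3.5-turbo-1106", prompt, 4_000))  # True  (14,000 <= 16,385)
print(fits("gpt-3.5-turbo-1106", prompt, 8_000))  # False (18,000 > 16,385)
print(fits("gpt-4-1106-preview", prompt, 4_000))  # True
```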

Both models are powerful tools for natural language processing and can be used for a wide range of applications.

GPT-4 is the newer model and is likely to have improvements over GPT-3.5-turbo.

However, GPT-3.5-turbo is faster and much more cost-effective, especially for applications that don't require the absolute cutting edge in language model capabilities.

Syntax

AIC.Set_Model_Chat|P1

AIC.SMH|P1

Parameter Explanation

P1 - The model to use: either a number from the table above or the model name itself.

Example

'*****************************************************

' EXAMPLE 1: AIC.-Commands

'*****************************************************

' Set OpenAI API-Key from the saved File

AIC.SetKey|File

' Set Model

AIC.Set_Model_Chat|4

' Set Model-Temperature

AIC.Set_Temperature|0

' Set Max-Tokens (maximum length of the answer; depending on the model up to 2000 tokens, which is about ~6000 characters)

' The more tokens you use, the more you pay.

AIC.SetMax_Token|25

' Ask Question and receive answer to $$RET

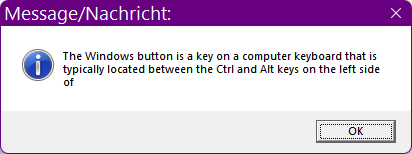

AIC.Ask_Chat|What is a "Windows Button"?|$$RET

MBX.$$RET

:enx

ENR.

Note that the Answer-Text is cut off at the end because i have specified a maximum of 25 Tokens in the Script.
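For reference, the Python sketch below builds the JSON body that a request to OpenAI's chat completions endpoint with the settings of this example would carry. The field names (model, temperature, max_tokens, messages) are those of OpenAI's public API; that AIC.Ask_Chat sends exactly this body is an assumption.

```python
import json

# Assumed request body for the example above (field names from OpenAI's
# Chat Completions API; MRL's actual request is not documented here).
payload = {
    "model": "gpt-3.5-turbo-16k",  # number 4 in the table
    "temperature": 0,              # AIC.Set_Temperature|0
    "max_tokens": 25,              # AIC.SetMax_Token|25: caps the answer length
    "messages": [
        {"role": "user", "content": 'What is a "Windows Button"?'},
    ],
}

print(json.dumps(payload, indent=2))
```

When the answer reaches the max_tokens limit, the API stops generating and reports finish_reason "length" instead of "stop", which is why the answer in the image ends mid-sentence.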

Remarks

-

Limitations:

-

See also:

• Set_Key